When talking to the client about business requirements, it’s always tempting to think that we can do just about anything in plugins if we really have to.

And it’s true that plugins allow us to implement very powerful customizations. On the other hand, that power does not come for free: all those plugins run on the Dynamics server, so they will be slowing the application down.

So I figured I’d do a quick comparison to see how plugins affect performance, just to illustrate what may happen. Imagine an oversimplified scenario (which might not even require a plugin) where we need to make sure that any time an account record is updated, it has “TEST” in the description field.

I’ve run 3 different tests to measure average update request execution time in each case:

1. For the first test, I disabled all the plugins on account “update” to get the baseline. There were 1000 updates in total, sent in batches of 100 using ExecuteMultiple requests.

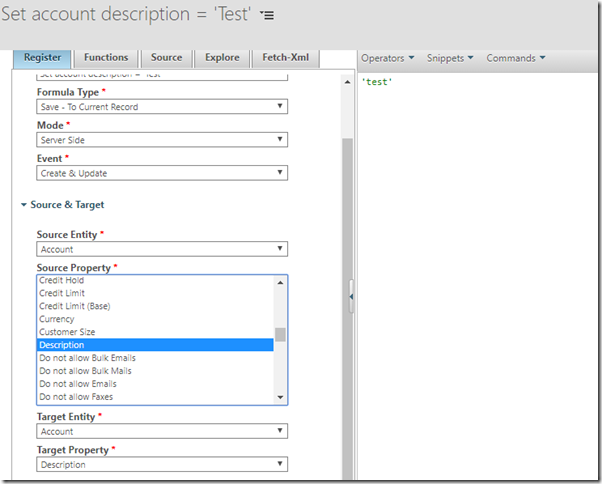

2. For the second test, I used a North52 formula.

In general, North52 is a great solution that simplifies all sorts of calculations in Dynamics... but I don’t quite agree with their own assessment of the performance impact, which you can find here:

You can download a free edition of North52 to use it in your environment – it does have some limitations, of course, but it can still be very useful.

Anyway, here is what the formula looked like:

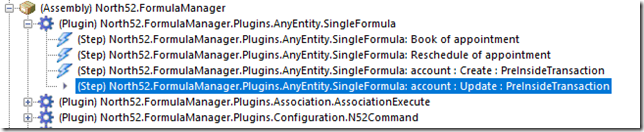

Just keep in mind that when you define a formula there, you are actually registering a plugin step (more precisely, North52 is doing it for you):

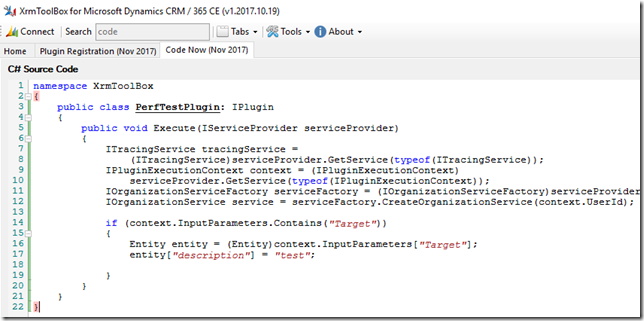

3. For the third test, I used a separate plugin.

That one is not doing much: it just sets the description field.
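The plugin’s source isn’t part of the post, so here is a minimal sketch of what such a plugin could look like. It assumes registration on the Update message of account in the Pre-Operation stage; the class and method names are hypothetical.

```csharp
using System;
using Microsoft.Xrm.Sdk;

// Hypothetical plugin class: assumes it is registered on account Update, Pre-Operation stage.
public class SetAccountDescriptionPlugin : IPlugin
{
    public void Execute(IServiceProvider serviceProvider)
    {
        var context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));

        // "Target" holds only the attributes sent with this Update request.
        if (context.InputParameters.Contains("Target") && context.InputParameters["Target"] is Entity target)
        {
            ApplyDescription(target);
        }
    }

    // Kept separate from Execute so the logic can be checked without a plugin context.
    public static void ApplyDescription(Entity target)
    {
        if (target.LogicalName != "account")
        {
            return;
        }

        // In Pre-Operation, changes made to Target are saved by the same core operation,
        // so no extra Update call (and no extra round trip) is needed.
        target["description"] = "TEST";
    }
}
```

Setting the value on Target in Pre-Operation, rather than issuing a second Update in Post-Operation, keeps the plugin’s own cost close to the minimum a synchronous plugin can have.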

There are a few more things worth mentioning:

- When running test #2, the plugin from test #3 was disabled

- When running test #3, the plugin from North52 was disabled

- Finally, I used a console utility for testing – you’ll see the code below:

```csharp
using System;
using System.Configuration;
using System.Diagnostics;
using Microsoft.Xrm.Sdk;
using Microsoft.Xrm.Sdk.Messages;
using Microsoft.Xrm.Tooling.Connector;

class Program
{
    public static IOrganizationService Service = null;

    static void Main(string[] args)
    {
        var conn = new CrmServiceClient(ConfigurationManager.ConnectionStrings["CodeNow"].ConnectionString);

        // Prefer the web proxy client; fall back to the older OrganizationServiceProxy.
        Service = (IOrganizationService)conn.OrganizationWebProxyClient ?? conn.OrganizationServiceProxy;

        int testCount = 10;       // number of ExecuteMultiple batches
        int requestPerTest = 100; // update requests per batch (1000 updates in total)
        double totalMs = 0;

        for (int i = 0; i < testCount; i++)
        {
            Console.WriteLine("Testing...");
            totalMs += UpdateAccountPerfTest(requestPerTest);
        }

        double average = totalMs / (testCount * requestPerTest);
        Console.WriteLine("Average update time: " + average.ToString("F1") + " ms");
        Console.ReadKey();
    }

    public static double UpdateAccountPerfTest(int requestCount)
    {
        Guid accountId = Guid.Parse("475B158C-541C-E511-80D3-3863BB347BA8");

        Entity updatedAccount = new Entity("account");
        updatedAccount["description"] = "1";
        updatedAccount.Id = accountId;

        UpdateRequest updateRequest = new UpdateRequest()
        {
            Target = updatedAccount
        };

        // Warm-up call; it is not included in the measured time.
        Service.Execute(updateRequest);

        // The same update is queued requestCount times in a single batch.
        OrganizationRequestCollection requestCollection = new OrganizationRequestCollection();
        for (int i = 0; i < requestCount; i++)
        {
            requestCollection.Add(updateRequest);
        }

        ExecuteMultipleRequest emr = new ExecuteMultipleRequest()
        {
            Requests = requestCollection,
            Settings = new ExecuteMultipleSettings()
            {
                ContinueOnError = true,
                ReturnResponses = true
            }
        };

        // Stopwatch is more precise than subtracting DateTime.Now values.
        Stopwatch timer = Stopwatch.StartNew();
        var response = (ExecuteMultipleResponse)Service.Execute(emr);
        timer.Stop();

        // With ContinueOnError = true, failed updates do not throw, so report them explicitly.
        if (response.IsFaulted)
        {
            Console.WriteLine("Some updates in this batch failed.");
        }

        return timer.Elapsed.TotalMilliseconds;
    }
}
```
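Tests #2 and #3 depend on switching individual plugin steps off and on. The post doesn’t say how that was done; one way to do it from the same kind of console utility is a SetStateRequest against the step record. A hedged sketch, assuming you already know the step’s id (the class and method names are hypothetical):

```csharp
using System;
using Microsoft.Crm.Sdk.Messages;
using Microsoft.Xrm.Sdk;

// Hypothetical helper for enabling/disabling a plugin step between test runs.
public static class PluginStepToggle
{
    // sdkmessageprocessingstep: statecode 0 = Enabled, 1 = Disabled;
    // statuscode 1 = Enabled, 2 = Disabled.
    public static SetStateRequest BuildRequest(Guid stepId, bool enable) =>
        new SetStateRequest
        {
            EntityMoniker = new EntityReference("sdkmessageprocessingstep", stepId),
            State = new OptionSetValue(enable ? 0 : 1),
            Status = new OptionSetValue(enable ? 1 : 2)
        };

    public static void SetEnabled(IOrganizationService service, Guid stepId, bool enable) =>
        service.Execute(BuildRequest(stepId, enable));
}
```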

All that said, here is how the tests worked out (I ran them a few times, actually; these numbers are the “best” I saw):

- Test #1 (no plugins): 99 ms per update request

- Test #2 (North52): 126 ms per update request

- Test #3 (separate plugin): 114 ms per update request

So, realistically, it’s almost impossible to notice the difference on any single update request. However, it’s still a difference of about 15% with the dedicated plugin and about 27% with North52, so North52 seems to be a little slower than a dedicated plugin. That could be expected, since North52 performs some additional work. Then again, the gap between North52 and the dedicated plugin might be down to something else, since I saw those numbers go up and down a bit between test runs for both Test #2 and Test #3.

The problem, though, is not those individual calls. It’s when you start looking at a really large number of updates that this kind of overhead suddenly becomes something to keep in mind.
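To put numbers on it, the sketch below projects the first-round averages onto a hypothetical volume of one million updates. It assumes the updates run one after another with no parallelism, so treat the totals as an illustration rather than a prediction for a real batch job; the class and method names are hypothetical.

```csharp
using System;

// Projects the per-request averages from the first round of tests onto a large,
// hypothetical number of sequential updates.
public static class OverheadProjection
{
    // Relative overhead versus the no-plugin baseline, in percent.
    public static double OverheadPercent(double baselineMs, double withPluginMs) =>
        (withPluginMs / baselineMs - 1) * 100;

    // Total time in hours if every update ran one after another (no parallelism).
    public static double TotalHours(long updates, double msPerUpdate) =>
        updates * msPerUpdate / 3_600_000;

    public static void PrintReport()
    {
        const long updates = 1_000_000; // hypothetical volume, e.g. a data migration
        var scenarios = new (string Name, double Ms)[]
        {
            ("No plugins", 99), ("North52", 126), ("Separate plugin", 114)
        };

        foreach (var (name, ms) in scenarios)
        {
            Console.WriteLine(
                $"{name}: {ms} ms/update, +{OverheadPercent(99, ms):F0}% overhead, " +
                $"{TotalHours(updates, ms):F1} h for {updates:N0} updates");
        }
    }
}
```

With these inputs, the overhead works out to about 27% for North52 and 15% for the separate plugin, and one million sequential updates take 27.5 hours without plugins versus 35 hours with the North52 formula.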

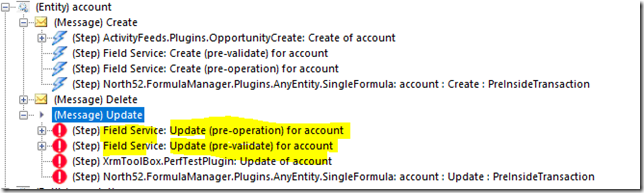

But that’s not all yet. There are a couple of message processing steps on the account entity that come with the Field Service solution, so what if I run the tests again with the Field Service plugins disabled?

- Test #1 (no plugins): 27-32 ms per update request

- Test #2 (North52): 59-63 ms per update request

- Test #3 (separate plugin): 59-63 ms per update request

In other words, with no plugins at all, average update request performance was about 3-4 times better than in the first round, and a single plugin roughly doubled the time per request. At the same time, North52 seemed to be about as efficient as an independent plugin, at least with that simple formula... except in one scenario:

Normally, we won’t register plugins to run off just any attribute; we configure them to run on the update of specific attributes only. For example, when running Test #2, I tried using only a single attribute (“name”) as the trigger, and I got exactly the same average execution time as with no plugins at all. That makes sense: the test only updates the description field, so the step never fired. With North52, we can do the same using the Plugin Registration Tool, but there seems to be no way to do it directly in the Formula Editor. Something to keep in mind, then.
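The trigger attributes live on the step record itself, in the filteringattributes column of sdkmessageprocessingstep, so they can also be changed from code. A hedged sketch, assuming you already know the step’s id and that the step can be customized in your environment (the class and method names are hypothetical):

```csharp
using System;
using Microsoft.Xrm.Sdk;

// Hypothetical helper that narrows the attributes a plugin step is triggered on.
public static class StepFilteringAttributes
{
    // Builds an update for a plugin step so that it only fires when one of the
    // listed attributes is part of the Update request.
    public static Entity BuildUpdate(Guid stepId, params string[] attributeNames)
    {
        var step = new Entity("sdkmessageprocessingstep", stepId);
        // filteringattributes is a comma-separated list of attribute logical names.
        step["filteringattributes"] = string.Join(",", attributeNames);
        return step;
    }

    // stepId would come from the Plugin Registration Tool or from a query.
    public static void RestrictToName(IOrganizationService service, Guid stepId) =>
        service.Update(BuildUpdate(stepId, "name"));
}
```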

So, be aware. You may not notice the difference on every single update/create operation, but at some point you may have to start optimizing plugin performance by adjusting the list of attributes the plugins are triggered on, by simplifying the calculations, or even by moving those calculations into an external process.

Thanks for the write-up, Alex, it’s much appreciated. Nothing better than getting independent analysis.

Some explanations

Test 1: Separate plugin 114 ms vs North52 126 ms

Really happy with this, as besides going through the whole rules engine, the 2 big extra steps we need to do are retrieving the metadata for the entity that the formula is executing against (that’s a RetrieveEntityRequest with the filters set to Attributes & Relationships) and retrieving the list of applicable formulas for the current event. On these tests that’s a 12 ms hit, but it’s well worth it, as it allows us to do things that help optimize real-world scenarios. See second post.
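For reference, the metadata call John describes corresponds to a RetrieveEntityRequest with EntityFilters set to Attributes and Relationships. A minimal sketch of that request using the standard SDK message; the helper names are hypothetical, and this is not North52’s code:

```csharp
using System;
using Microsoft.Xrm.Sdk;
using Microsoft.Xrm.Sdk.Messages;
using Microsoft.Xrm.Sdk.Metadata;

// Hypothetical helper: retrieves attribute and relationship metadata for one entity.
public static class EntityMetadataLookup
{
    public static RetrieveEntityRequest BuildRequest(string entityLogicalName) =>
        new RetrieveEntityRequest
        {
            LogicalName = entityLogicalName,
            // Only attributes and relationships, not privileges or other metadata.
            EntityFilters = EntityFilters.Attributes | EntityFilters.Relationships,
            RetrieveAsIfPublished = false
        };

    // Each call is a separate request to the platform on top of the update itself.
    public static EntityMetadata Retrieve(IOrganizationService service, string entityLogicalName)
    {
        var response = (RetrieveEntityResponse)service.Execute(BuildRequest(entityLogicalName));
        return response.EntityMetadata;
    }
}
```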

Test 2: Separate plugin & North52 59-63 ms

Good results here, as it’s the same result. The point about the one exception in the Formula Editor itself is true. It’s a holdover from CRM 2011, which we have left alone as we still have customers running CRM 2011. We are dropping support for it this December (18 months after Microsoft; we really try to look after our customers), so expect the formula editor to be updated in the new year so you get even more control.

While this was a simple scenario, it’s in real-world scenarios that North52 BPA shines. A good example is Metro Bank (a retail bank in the UK), which runs a lot of its day-to-day operations inside CRM Online. North52 BPA performs a lot of their business rules with no performance issues reported in 4 years, and they have benefited from the continuity North52 has provided through 4 major CRM platform upgrades.

https://www.north52.com/business-process-activities/customers/metro-bank-case-study/

Deep Performance

For the curious technical folks who want to know how North52 can match or even beat the performance of a custom-written plugin: there are lots of small things we do, but I’d like to focus on one of them in the following example.

Because we make that initial entity metadata call and have all the formula logic in memory, we can actually pre-scan and optimize items like FetchXML queries to the database. So consultant/developer number one can write a formula that uses our FindValue() function (really a FetchXML query) to retrieve some data and implement some logic.

Then consultant/developer number two writes a very similar FindValue() call that retrieves a different data field and implements different logic. In our pre-scan we can automatically detect this scenario and merge the two FindValue() calls into a single FetchXML query. This also works across pipeline stages: the merged fetch query is executed in Pre-Operation, and we then use the Shared Variables property bag to share the results automatically across 2 different formulas written by two different consultants/developers.
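The Shared Variables mechanism itself is part of the plugin execution context: a value a Pre-Operation plugin puts into context.SharedVariables is visible to a Post-Operation plugin running in the same pipeline. A hedged sketch of that pattern only, not North52’s implementation; the key, class, and method names are hypothetical, and the query is left out:

```csharp
using System;
using Microsoft.Xrm.Sdk;

// Hypothetical helpers for passing a query result between pipeline stages.
public static class SharedQueryResult
{
    public const string Key = "hypothetical_merged_query_result";

    public static void Store(ParameterCollection sharedVariables, string value) =>
        sharedVariables[Key] = value;

    // Returns null when nothing was stored (e.g. the pre-operation step did not run).
    public static string TryRead(ParameterCollection sharedVariables) =>
        sharedVariables.Contains(Key) ? sharedVariables[Key] as string : null;
}

// Pre-Operation: run the (merged) query once and stash the result.
public class PreOperationPlugin : IPlugin
{
    public void Execute(IServiceProvider serviceProvider)
    {
        var context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));
        // In a real plugin this value would come from a single FetchXML query.
        SharedQueryResult.Store(context.SharedVariables, "result of the merged query");
    }
}

// Post-Operation in the same pipeline: reuse the result instead of querying again.
public class PostOperationPlugin : IPlugin
{
    public void Execute(IServiceProvider serviceProvider)
    {
        var context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));
        var tracing = (ITracingService)serviceProvider.GetService(typeof(ITracingService));
        string cached = SharedQueryResult.TryRead(context.SharedVariables);
        tracing.Trace("Shared result: {0}", cached ?? "(not set, a query would be needed)");
    }
}
```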

This gives a massive jump in performance for real-world scenarios, as a lot of plugins query the database, and that is one of the most expensive things from a performance viewpoint.

Projects with lots of developers run into the above problem a lot: over the course of months or even years, different people implement the logic, and it can become spaghetti with lots of different queries hitting the database. We do our best to take all this away and deliver great performance, while letting the consultant/developer do the important part, which is engaging with the end customer and understanding their needs.

Final comment

A recommendation I would make for anyone needing serious performance testing is to look at tools like the following:

Testing Performance and Stress Using Visual Studio Web Performance and Load Tests

https://msdn.microsoft.com/en-us/library/dd293540(v=vs.110).aspx

StresStimulus

http://www.stresstimulus.com/

We use Visual Studio mostly, but I have played around with StresStimulus and it’s much easier to get to grips with than Visual Studio.

Hi John,

Thank you for the feedback; those are awesome comments (you might want to write up something along those lines on that support page discussing North52 performance). I agree there are better tools for performance testing, “production grade” if you wish, but the purpose was not really to explore all the edge cases. It was more to cover the basics. With your comments, I think it’s all covered now :)

PS. Although... I’m pretty sure a well-written custom plugin can still beat North52 🙂 Not that they are all well-written, of course...

Great write-up and great comments!

We’re about to do a performance assessment of North52 vs Custom Plugins (vs Sync Workflows) in the coming weeks at our Government of Canada Department where we’re considering North52 as a major productivity enhancer (and cost-reducer!) and this is an excellent reference!

Frankly I’m thrilled/relieved to see how small the difference is knowing the capabilities of N52 and the amount of configuration this will allow us to use rather than custom development. Awesome!