I think whoever came up with the term “prompt engineering” may have misunderstood what “engineering” is 😊 Alternatively, they might have honestly hoped that the art of composing AI prompts would turn into actual engineering. So far, I think, that has not happened, and it is unlikely to happen.

Just look at how “engineering” is defined and what it involves:

Engineering | Definition, History, Functions, & Facts | Britannica

The field has been defined by the Engineers Council for Professional Development, in the United States, as the creative application of “scientific principles to design or develop structures, machines, apparatus, or manufacturing processes, or works utilizing them singly or in combination; or to construct or operate the same with full cognizance of their design; or to forecast their behaviour under specific operating conditions; all as respects an intended function, economics of operation and safety to life and property.”

It goes on to mention preparation, training, standards, etc.

It also says this: “The function of the scientist is to know, while that of the engineer is to do. Scientists add to the store of verified systematized knowledge of the physical world, and engineers bring this knowledge to bear on practical problems.”

Now, of course, one can stretch this definition and assume that “prompt design” is “engineering”, too. However, I think one important aspect of engineering is the ability to calculate and predict outcomes, whereas the way an LLM is going to respond to a specific prompt is not really predictable. There are certain techniques you can use to get the outcome you want, but, more often than not, you just can’t say in advance which technique is going to produce the best results.

Funnily enough, it’s not as if the AI community does not know that. Just look at how prompt engineering is defined at https://learnprompting.org/ :

Prompt engineering is the process of crafting and refining prompts to improve the performance of generative AI models

Now, “crafting and refining prompts” makes total sense, with one notable caveat.

How do you know that you are getting a correct answer from the LLM if you can’t easily verify that answer? After all, getting the most accurate answer is the purpose of “prompt crafting”, but if you don’t know whether the answer is correct or not, how long do you keep crafting?

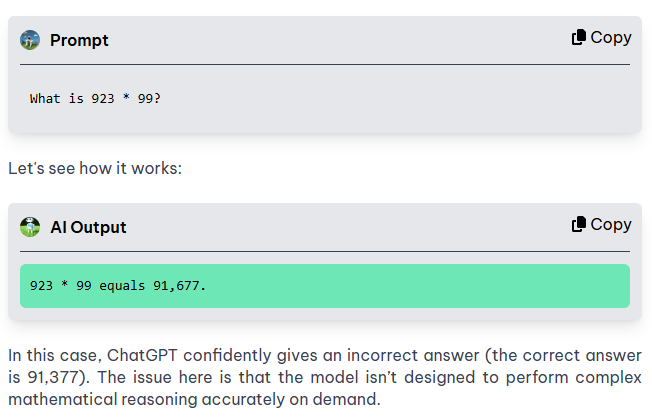

As in (and bear in mind this particular error is not happening anymore if you ask ChatGPT today), look at this example from the learnprompting.org:

Well, it’s great, except: how would you know that the answer was incorrect? Of course, you could just use a calculator to verify it, but then you would not need to ask ChatGPT to begin with. And think of more complex examples: you can ask a valid question and get garbage in return without even realizing what has happened.

I’m giving that example not to get into a discussion of how to mitigate LLM overconfidence, but just to illustrate the problem.

Imagine a bridge engineer making this kind of mistake when calculating the maximum load, for example. In the worst-case scenario, the bridge may simply collapse on the first day.

Now imagine a prompt engineer designing an incorrect prompt. To begin with, no one may even notice for a while.

So, yes, I’d call it “prompt crafting” or “the art of creating LLM prompts”, but “engineering”?