A new version of the solution lifecycle management whitepaper for Dynamics was published recently. I was reading it the other night, and I figured I’d share a few thoughts. If you want to see the whitepaper first, though, you can download it from this page:

https://www.microsoft.com/en-us/download/details.aspx?id=57777

First, there is one thing this whitepaper is doing really well – it’s explaining solution layering to the point where all the questions seem to be answered.

1. It is worth looking at the behavior attribute if you like knowing how things work

There are a few examples of what that behavior attribute stands for, and I probably still need to digest some of those bits

2. There are a few awesome examples of upgrade scenarios

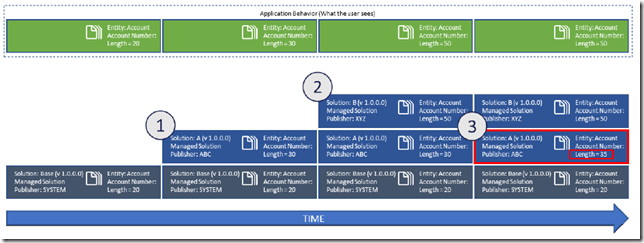

I’ll admit it – I could never fully understand the layering. Having read this version, I think I’m getting it now. Those diagrams are simply brilliant in that sense – don’t miss them if you are trying to make sense of the ALM for Dynamics.

3. There is a lot of detailed information on patching, cloning, etc

Make sure to read through those details even if you are skeptical of this whole ALM thing. It’s definitely worth reading.

Once you’ve gone over all the technical details, you will be getting into the world of recommendations, though. The difference is that with the recommendations you have a choice – you can choose to follow all of them, some of them, or none of them at all.

There is no argument that solution layering in Dynamics is a rather advanced concept, and it’s also rather confusing.

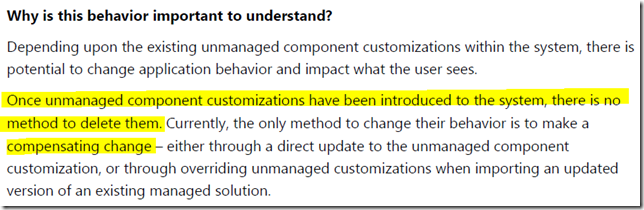

So maybe it’s worth thinking about why it’s there in the first place. The whitepaper provides an idea of the answer.

But I think there is more to it. To start with, what’s so wrong with the compensating changes?

From my standpoint, nothing.

However, where I stand, we are developing internal solutions where we know what we are doing, so we can create those compensating changes and, possibly, script them through the SDK/API.

What if there is a third-party solution that needs to be deployed into the environment? Suddenly, that ability to delete managed solutions with all associated customizations starts looking much more attractive. We try it, we don’t like it, so we delete it. Easy.

Is it, though?

As far as “delete” strategies go, any organization that has even the weakest auditing requirements will likely revoke “hard delete” permissions from everyone, leaving only the “soft delete” (which is “deactivate”) option. And yet, when deleting a managed solution, you are not deleting just the solution itself. If there are any attributes which are not included in other solutions, you’ll be losing the attributes, the data in them, and the audit log data for them. And what if you delete the whole entity?

So, deleting a solution can easily turn into a project of its own if you still want to meet your auditing requirements once it’s all done and over. Technically, you’d need to run a few tests, analyze the data, talk to the users, and confirm that you still comply with the regulations. It might be easier and cheaper to not even start this kind of project.

If you eventually decide to delete a component, managed solutions can help because of the reference counting. Dynamics will only delete a component (an attribute, for instance) once there are no references to it from the managed solutions. That is an extra layer of protection for you.
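To make the reference counting idea a bit more concrete, here is a minimal toy model of it. This is only a sketch of the concept, not how Dynamics implements it, and the `Environment` class, the solution names, and the attribute names are all made up for illustration.

```python
from collections import defaultdict


class Environment:
    """Toy model of component reference counting (hypothetical, not the Dynamics implementation)."""

    def __init__(self):
        # component name -> set of managed solutions that include it
        self.references = defaultdict(set)

    def install(self, solution, components):
        for component in components:
            self.references[component].add(solution)

    def uninstall(self, solution):
        """Remove a solution and return the components that were actually deleted."""
        deleted = []
        for component in list(self.references):
            owners = self.references[component]
            owners.discard(solution)
            if not owners:
                # The last reference is gone, so the component (and its data) goes too.
                del self.references[component]
                deleted.append(component)
        return sorted(deleted)


env = Environment()
env.install("SolutionA", ["new_score", "new_region"])
env.install("SolutionB", ["new_region"])

removed = env.uninstall("SolutionA")
print(removed)  # only the attribute that no other solution references
```

In this model, `new_region` survives the removal of `SolutionA` because `SolutionB` still references it, while `new_score` is deleted along with whatever data it held.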

Still, here is at least some of what you lose when you start deploying managed solutions:

- You can’t restore your dev instance from production backup, since you won’t be able to export from the restored instance

- There are some interesting side-effects of the “instance copy” approach, by the way. Imagine you have marketing enabled in production, but it’s not something you need or want to really enable in dev. Those licenses are rather expensive, after all. Still, you might want to update a form for marketing, so you would need that solution in dev. You could bring it to dev through the instance copy. Marketing in general wouldn’t work because of the missing license, but all customizations would still be in dev that way, so you’d be able to work with those customizations

- When looking at the application behavior in production, you have to keep layering in mind. Things might not be exactly what they look like since there can be layers over layers, and it may even depend on the order in which different solutions have been deployed

So, managed or unmanaged? I don’t think it’s been settled once and for all yet. Although, if the solution is for external distribution, I’d argue in favor of “managed” any time.
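The point about deployment order in the list above can be illustrated with a deliberately simplified "top layer wins" model. This is a sketch under that assumption only: the real platform also merges some component types rather than overriding them, and the layer names and properties here are hypothetical.

```python
def effective_properties(layers):
    """Resolve properties from the bottom layer to the top; the topmost value wins.

    `layers` is a list of (layer_name, properties) pairs ordered bottom to top.
    Returns a dict mapping each property to (value, name of the layer that set it).
    """
    result = {}
    for layer_name, properties in layers:
        for prop, value in properties.items():
            result[prop] = (value, layer_name)
    return result


base = ("Base", {"label": "Account Name", "required": False})
vendor_x = ("VendorX", {"label": "Customer"})
vendor_y = ("VendorY", {"label": "Client", "required": True})

# Same solutions, different deployment order, different effective label.
x_then_y = effective_properties([base, vendor_x, vendor_y])
y_then_x = effective_properties([base, vendor_y, vendor_x])

print(x_then_y["label"])
print(y_then_x["label"])
```

Keeping track of which layer supplied each value is the useful part here: when production does not look the way you expect, that is the question you end up asking.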

Then, there is the question of instances. To start with, they are not free anymore.

The idea of having an instance per developer works perfectly well in the on-prem environments, but when it comes to the online instances, this is where (at least for now) we have to pay. Is it worth it? Is it not worth it? It’s definitely making it more difficult to try.

And then there is tooling.

Merging is the most complicated problem in Dynamics. As soon as you start getting multiple instances you have to start thinking of how to merge all the configurations. Of course you can try segmentation, but it’s not a holy grail. No matter how accurate you are in making sure that everyone in your team is only working on their own changes and there is no interference, it will still happen. There will still be a need to merge something every now and then.

SolutionPackager is trying to address that need and, in my mind, does a wonderful job. It’s just not, really, enough. You can export your solution, you can identify where the changes are by looking at the updated XML files, and then, if there are conflicting changes, you have to fire up a Dynamics instance and do the merge manually there. Technically, you are not supposed to manually merge those XML files (you can try, but you’d need a very advanced understanding of those files in the first place). So it’s useful, but it’s not as if you were using source control to merge a couple of C# files.
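As a rough illustration of the "identify where the changes are" step, here is a small sketch that compares folders of already extracted solution files and lists the files that two people both changed relative to a common base. It assumes the extraction has been done beforehand, it does not call SolutionPackager itself, and the folder and file names in the demo are made up.

```python
import hashlib
import tempfile
from pathlib import Path


def file_hashes(root):
    """Map each file path (relative to root) to a SHA-256 digest of its contents."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in root.rglob("*")
        if path.is_file()
    }


def changed_files(base_dir, other_dir):
    """Files that were added, removed, or modified in other_dir compared to base_dir."""
    base, other = file_hashes(base_dir), file_hashes(other_dir)
    return {name for name in base.keys() | other.keys() if base.get(name) != other.get(name)}


def conflict_candidates(base_dir, mine_dir, theirs_dir):
    """Files changed on both sides with different results; these need a manual merge."""
    mine, theirs = file_hashes(mine_dir), file_hashes(theirs_dir)
    both = changed_files(base_dir, mine_dir) & changed_files(base_dir, theirs_dir)
    return sorted(name for name in both if mine.get(name) != theirs.get(name))


def demo():
    """Build three tiny fake extracts in a temp folder and compare them."""
    contents = {
        "base": {"Entities/Account/Entity.xml": "v1", "Other/Customizations.xml": "v1"},
        "mine": {"Entities/Account/Entity.xml": "v2-mine", "Other/Customizations.xml": "v1"},
        "theirs": {"Entities/Account/Entity.xml": "v2-theirs", "Other/Customizations.xml": "v2"},
    }
    with tempfile.TemporaryDirectory() as tmp:
        tmp = Path(tmp)
        for folder, files in contents.items():
            for name, text in files.items():
                target = tmp / folder / name
                target.parent.mkdir(parents=True, exist_ok=True)
                target.write_text(text)
        return conflict_candidates(tmp / "base", tmp / "mine", tmp / "theirs")


print(demo())
```

A script like this only tells you where to look. The actual merge of a conflicting file would still happen in a Dynamics instance, as described above.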

Then, for the configuration data, you have to introduce a manual step of preparing the data. There were a few attempts to make this an automated process, including this tool of my own: http://www.itaintboring.com/tag/ezchange/. But, in the end, I tend to think that it might be easier to store that kind of configuration data in CSV format somewhere in the source control and use a utility to import that data to Dynamics as part of the deployment (and, possibly, do the same even for the dev environment). So your dev instance would not be the source of this data; it would be coming right from a CSV file.
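Here is a minimal sketch of what the CSV side of such a utility might look like. It only reads the file and works out which records would need to be created or updated; the call that actually writes to Dynamics is left out on purpose, and the column names and sample rows are hypothetical.

```python
import csv
import io


def load_config_rows(csv_text, key_column):
    """Parse CSV text into a dict of rows keyed by the given column."""
    rows = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = row[key_column]
        if key in rows:
            raise ValueError(f"duplicate key in config data: {key}")
        rows[key] = row
    return rows


def plan_import(desired, existing):
    """Decide which rows to create or update; nothing is ever deleted."""
    creates = [row for key, row in desired.items() if key not in existing]
    updates = [
        row for key, row in desired.items()
        if key in existing and existing[key] != row
    ]
    return creates, updates


# Hypothetical configuration data as it would be stored in source control.
CSV_TEXT = """new_code,new_name,new_rate
ON,Ontario,0.13
QC,Quebec,0.14975
"""

desired = load_config_rows(CSV_TEXT, "new_code")

# Hypothetical snapshot of what the target instance currently holds.
existing = {"ON": {"new_code": "ON", "new_name": "Ontario", "new_rate": "0.15"}}

creates, updates = plan_import(desired, existing)
print([row["new_code"] for row in creates])
print([row["new_code"] for row in updates])
```

Matching on a business key rather than on a record ID is a design choice in this sketch: it keeps the CSV file readable and lets the same file be applied to dev, test, and production.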

All that said, I think the whole reason for this complexity is not that Dynamics has not been trying to make things easier.

It’s just a very complicated task given that Dynamics is a platform, and there can be many applications running on that platform in the same instance. They all have to live together, comply with some rules, follow some upgrade procedures, and not break each other in the process.

So, to conclude this post: of course it would be really great if some kind of API were introduced in Dynamics to do all the configuration changes programmatically, since you know how it goes with customization.xml, where manual changes are only supported to a certain extent. Maybe it’ll happen at some point. I can’t even start imagining what tools the community would come up with then.

For now, though, make sure not to ignore this whitepaper. Whether you agree with everything there or not, there is a lot of technical knowledge there that will help you in setting up your ALM processes for Dynamics.