Sometimes I think that Microsoft Cloud is not quite a cloud – it’s, actually, more like an ocean (which is, probably, similar to how things are with other “clouds” to be fair).

As an on-premise consultant, I did not use to appreciate the depth of Microsoft cloud at all. As a Power Platform consultant, I started to realize some of the extra capabilities offered by the Power Platform, such as:

- Canvas Applications

- Power Automate Flows

- Different licensing options (can be good and bad)

- Integration with Azure AD

Yet I was suffering quite often since, you know, there is “no way I can access the database”.

And, then, I tried the Dataflows recently. Which took me on a little different exploration path and made me realize that, as much as I’m enjoying swimming in the familiar lake, it seems there is so much more water out there. There is probably more than I can hope to cover, but I certainly would not mind going on a cruise and see some of it. So, this post is just that – a little cruise into the cloud ETL/ELT capabilities:

And, by the way, normally, you don’t really do deep diving on a cruise. You are out there to relax and see places. Here is the map – there will be a few stops, and, of course, you are welcome to join (it’s free!):

Stop #1: On-Premise ETL tools for Dynamics/Power Platform

If you have not worked with Dynamics on-premise, and I am assuming it’s about time for the pure-breed cloud consultants to start showing up, on-premise ETL tools might be a little unfamiliar. However, those are, actually, well-chartered waters. On-premise ETL tools have been around for a long time, and, right off the top of my head, I can mention at least a few which I touched in the past:

- SSIS

- Scribe(now Tibco – thank you Shidin Haridas for mentioning they were acquired)

- Informatica

They all used to work with Dynamics CRM/Dynamics 365 just fine. Some of them turned into SAAS tools (Scribe online, for example), and some of them took a different route by merging into the new cloud tools (SSIS). Either way, in order to use those tools we had to deploy them on premise, we had to maintain them, we had to provide required infrastructure, etc. Although, on the positive side, the licensing was never about “pay per use” – those tools were, usually, licensed per the number of connections and/or agents.

We are still just near the shore, though.

Stop #2: PowerPlatform ETL capabilities

This is where we are going a little beyond the familiar waters – we can still use those on-premise ETL tools, but things are changing. Continuing the analogy, the cruise ship is now somewhere at sea.

Even if you’ve been working with the Power Platform for a while now, you might not be aware of the ETL capabilities embedded into the Power Platform. As of now, there are, actually, at least 3 options which are right there:

- Power Automate Flows

- Dataflows

- Data lake export

And, of course, we can often still use on-premise tools. After all, we are not that far from the shore. Though we are far enough for a bunch of things to have changed. For example, this is where an additional Power Platform licensing component kicks in since Power Apps licenses come with a certain number of allowed API calls.

Still, why would I call out those 3 options above? Technically, they are offering everything you need to create a ETL pipeline:

- A schedule/a trigger/manual start

- A selection of data sources

- A selection of data destinations

Well, data lake export is special in that sense, since it’s hardwired for the CDS to Azure Data Lake export, but, when in the cloud, that’s an important route, it seems.

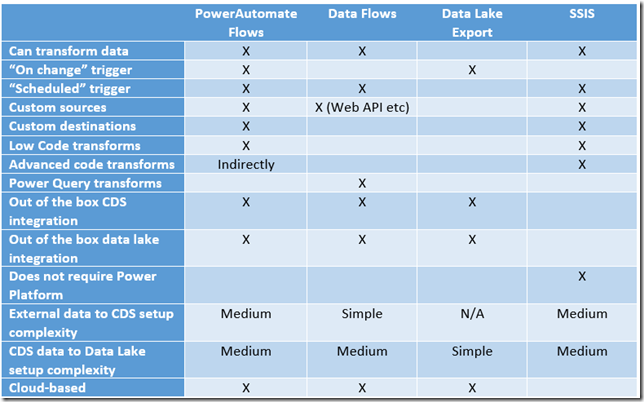

How do they compare to each other, though? And, also, how do they compare to the on-premise ETL tools (let’s consider SSIS for example):

The interesting part about Data Lake Export is that it does not seem to have any obvious advantages over any of the other tools EXCEPT that setting up CDS to Data Lake export looks extremely simple when done through “data lake export”.

Stop #3: Azure Data Factory

Getting back to the analogy of Azure being the ocean, it should not surprise you that, once in the ocean, we can probably still find the water somewhat familiar, and, depending on where we are, we might see familiar species. Still, the waters are certainly getting deeper, and there can be some interesting ocean-only life forms.

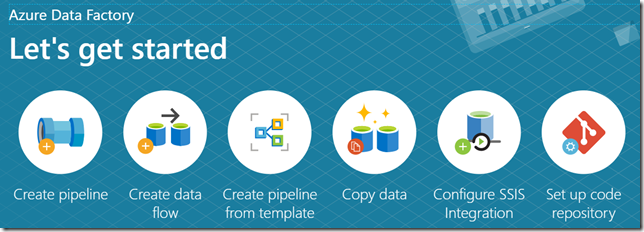

Hey, there is one just off the port side… Have you seen Azure Data Factory? That’s a real beast:

This one is strong enough to survive in the open waters – it does not care about Power Platform that much. It probably thinks Power Platform is not worth all the attention we are paying it, since here is what Azure Data Factory can offer:

- It has data flows to start with

- It can copy data

- It has connectors

- It has functions

- It has loops

- It is scalable

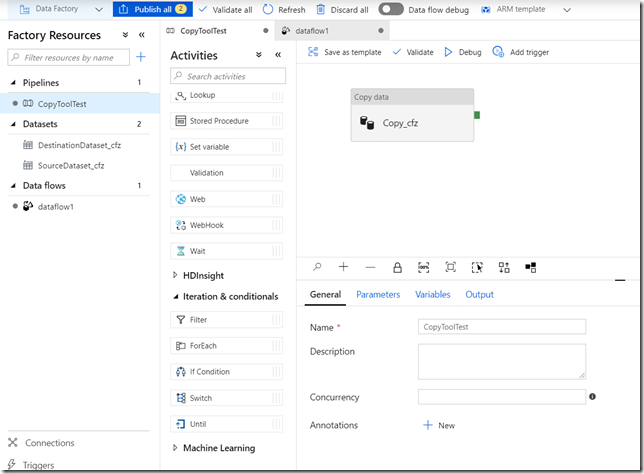

- Pipeline designer looks somewhat similar to SSIS

- It can actually run SSIS packages

- It allows deployment of self-hosted(on-premise) integration runtime to work with on-premise data

- It offers pipeline triggers

- If has the ability to create reusable data flows

- It has native support for CI CD (so, there is dev-test-prod)

And I think it has much more, but, well, it’s a little hard to see everything there is to it while on a cruise. Still, this screenshot might give you an idea of what it looks like:

In terms of data transformations, it seems there is a lot more one can do with the Data Factory than we can possibly do with the Dataflows/Data Lake Export/Power Automate Flows.

Although, of course, Data Factory does not really care about the Power Platform (I was trying to show it Power Platform solutions, and it just ignored them altogether. Poor thing is not aware of the solutions)

Finally, going back and relaxing in the sun…

It’s nice to be on a cruise, but it’s also great to be going home. And, as we are returning to the familiar Power Platform waters, let’s try putting all the above in perspective. The way I see it now, and I might be more than a little wrong, since, really, I did not have an opportunity to do a deep dive on this cruise, here is how it looks like:

- SSIS will be becoming less and less relevant

- Azure Data Factory will take over (probably has already done it)

- Power Platform’s approach is almost funny in that sense. And, yet, it’s extremely useful. Following the familiar low code/no code philosophy, Power Platform has introduced its own tools. Which often look like simplified (and smaller) versions of their Azure counterparts, but which are meant to solve common Power Platform problems, and which are sometimes optimized for the Power Platform scenarios (environments, solutions, CDS data source, etc). The funny part there is that we, Power Platform consultants, are treated a little bit like kids who can’t be trusted with the real things. But, well, that approach does have some advantages:)

Excellent round of up all these options, Alex – thanks for writing. It does indeed look a bit overwhelming with all these new products/options coming up, hard to keep up with and even harder to do a comparison.

We have been using Azure Data Factory since almost a year now as part of D365 implementations but it looks like Data Flows is a better option. We also had customers using Data Export Service (DES) to export D365 data to Azure SQL database for extensive PowerBI reporting but perhaps Export to Data Lake is a better option (not sure, maybe?)

Another interesting bit that I found recently, in this particular context, is Connections (in make.powerapps.com) – where you can create connections to whole bunch of things. This only works with Canvas apps right now, but if it gets available on model-driven too (not sure if it is in roadmap?), that’ll be another game-changer.

Do you have any rough ideas on the cost. Data Lake might have all the functionality but its significantly more expensive then customers won’t like it.

It has classic azure consumption costs but without an actual example I’m not sure how these stack up.

great article

Other than trying a price calculator, no ideas really. Would be great to hear from someone who’s using the Data Lake (and the export, I guess)

Hey Alex

SSIS can also be mounted on Azure Data Factory. KingswaySoft does it beautifully. As Ben said in his comment, in architecture discussions not all decisions are made based on functionality. Costing and also capabilities to extend/customise play a big role in making final decisions.

Hi Jandost, I agree, although, you can also create an ADF pipeline which works with Dynamics/CDS without SSIS/Kingsway. This whole area seems to be filled with Microsoft tools to the point where they are just competing with each other:) In that sense SSIS, as reliable as it is, kind of looks “old” so I wonder… anyways, I am actually using SSIS on another project because of client preference.